
Progressive Post

The EC’s recent proposal for AI regulation is the first of its kind in the world. It is an excellent basis for shaping a domain that opens up enormous opportunities. But the door to abuse and broad interpretation by some member states, as well as by illiberal regimes worldwide, is not yet entirely closed. And given a technology that can be highly invasive of workers’ rights, better protection is still necessary.

On Wednesday 21 April, the Commission unveiled its long-awaited proposal to regulate AI. It can be considered the first endeavour of its kind in the world, which is why expectations were very high, in Europe as well as abroad. Regulating fast-evolving technologies with such broad implications for every sector of our economies and societies is undoubtedly no easy task: it required years of work, study and consultation with a wide range of stakeholders.

The European Parliament has contributed actively to this effort from the beginning: it called on the Commission to establish a legislative framework on AI as early as 2017, and followed this up with its latest resolutions on AI last October and with the ongoing work of the Special Committee on Artificial Intelligence. At first glance, the proposal contains a range of undoubtedly positive elements, although some challenges remain.

First of all, despite months of announcements that the focus would be only on high-risk applications, the draft proposal introduces four categories of applications, each with a different degree of obligations. These range from low-risk applications, such as the management of high volumes of non-personal data, through those subject to transparency requirements, such as chatbots, to high-risk ones, such as robotic surgical devices, predictive tools for granting and revoking social benefits and tools for deciding on the termination of employment contracts, and finally to prohibited applications. This is undoubtedly a response to the concerns that many in Parliament, as well as civil society organisations, have been voicing over recent months, calling for a more nuanced approach than a merely binary one.

In particular, in March we sent a letter to President von der Leyen and the Commissioners involved, co-signed by 116 MEPs from across the political spectrum, demanding that fundamental rights be put first when regulating AI. In her reply, von der Leyen shared our concerns and confirmed the need to “go further” than strict obligations in the case of applications that would be clearly incompatible with fundamental rights. The uses explicitly prohibited in Article 5 of the draft Regulation, such as subliminal manipulation, exploitation of vulnerable groups for harmful purposes, social scoring and mass surveillance, confirm this commitment. The emphasis on immaterial damage to persons and society is also welcome, as are the strict requirements on high-quality datasets, robust cybersecurity and human oversight for high-risk applications. However, several aspects remain to be clarified.

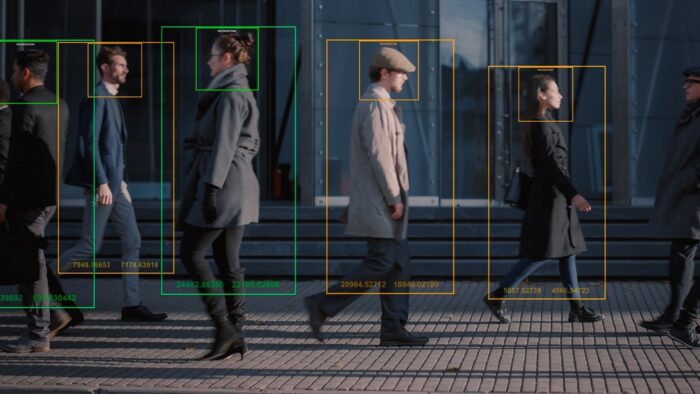

The issue that has attracted the most attention from commentators, and to which Commissioners Margrethe Vestager and Thierry Breton devoted most of their presentation on 21 April, is real-time remote biometric identification in public spaces for law enforcement purposes. Compared with the text leaked a week before the proposal was presented, which generated considerable discussion and concern, point (d) of draft Article 5 and the subsequent paragraphs provide a more detailed and limited framework for this practice. They restrict the cases in which it can be used (now limited to situations such as child abduction, imminent terrorist threats and locating suspects of crimes punishable by more than three years of detention) and make it subject to judicial authorisation and to activation through national law. While this is a substantial improvement on the leaked text, concerns persist about remaining possible abuses and broad interpretation by some member states.

Another area to flag is labour rights. Annex III lists high-risk applications, including those that monitor and evaluate workers’ behaviour and performance, those that track the time a worker spends in front of the computer when teleworking, and even those that assess workers’ mood by detecting emotions during calls. Under Article 43, which sets out the conformity assessment procedures, this and other sensitive applications can undergo an internal conformity assessment (a self-assessment) instead of a third-party one. Regulating so loosely a practice that is so invasive of workers’ rights could be very dangerous, all the more so given that these rules will apply to all AI developers targeting the EU market, including non-EU entities that might not share our values.

Allowing providers to affix the CE marking themselves means that potential violations would only be discovered at a later stage, by overburdened market surveillance authorities, once damage has already occurred. Given that applications concerning the allocation of social benefits, illegal migration and crime prevention are on the same list, we can see the risk of undermining constitutional principles such as the presumption of innocence and non-discrimination.

We cannot afford mistakes in an era when authoritarian regimes are setting their own illiberal standards. On the contrary, as with the GDPR, we have a unique opportunity to set a world standard for human-centric, trustworthy AI, allowing our citizens and businesses to make the most of such a promising technology, whose benefits we already experience in a wide variety of sectors. The European Parliament therefore welcomes the proposal and stands ready to improve the text: to ensure that appropriate safeguards are in place for high-risk applications, to stimulate good innovation, and to create a true Internal Market for AI that serves humanity, not only the interests of the few.
