The Progressive Post

“Like a boss” – management by algorithm is taking over at the workplace

All managerial functions are now being entrusted to algorithm-powered tools, raising high expectations and new risks. This trend is not confined to platform work: no economic sector is immune to the adoption of such systems. The EU institutions are engaged in a promising process that may lead to new regulatory solutions, but social dialogue and collective bargaining will remain essential.

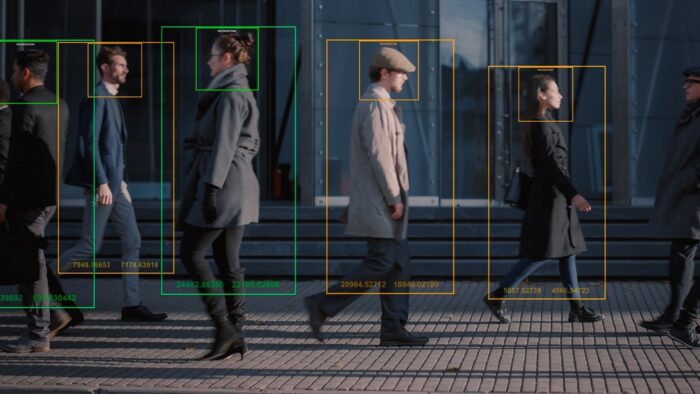

The use of facial recognition and shift scheduling, smart badges and QR codes, GPS tracking and wristbands, job applicant assessment and health self-reporting has ballooned in the last year. The labour market is experiencing a bewildering dystopia, and to a certain extent, we are docilely enjoying it.

A long-lasting process of datafication of working relationships, combined with ubiquitous tracking devices, the dizzying blurring of professional and private lives, and greater reliance on digital devices, creates an enticing opportunity to redesign power dynamics at the workplace, thus aggravating existing information asymmetries. AI-driven tools and more mundane software are now widely used to complement the role of managers and supervisors in all their tasks, from onboarding to promoting, from monitoring to firing. Often marketed as unbiased, fraud-proof and objective, the algorithms fuelling these practices are in fact abstract, formalised instructions for carrying out a computational procedure aimed at achieving a result, typically by increasing efficiency and enhancing performance. These game-changing technologies reflect business needs and specific preferences and, on many occasions, have proved to be far from perfect, as they replicate and reinforce human stereotypes or measure irrelevant parameters. What is worse, given their opaque nature, these models make it harder to understand employers’ strategies, and therefore to contest them. This also aggravates societal inequalities.

In the past, forms of all-encompassing surveillance were used to make classifications and get a sense of workflow bottlenecks or deviant conduct (the use of data was eminently descriptive). Nowadays, a deeper dependence on inferential analytics, enabled by machine learning, helps managers detect patterns and generate predictions about team dynamics, future behaviour and career prospects. At the same time, the overwhelming system of tacit penalties and rewards is also expected to force compliance, thus reconfiguring interactions and nullifying autonomy. As a result, workers’ choices are severely constrained. Katherine C. Kellogg and her co-authors argue that new models of algorithmic management are more “comprehensive, instantaneous, iterative and opaque” than before. From a labour law perspective, this gives employers a much more fine-grained, intrusive and adaptive form of control, which is not matched by increased powers of contestation for workers. Addressing this widespread augmentation of managerial power in already unbalanced contractual relationships is vital for two main reasons. First, to escape a process of commodification of working relationships and dilution of corporate obligations. Second, to prevent workers from associating technology only with increased control and exploitation. Such a loss of trust could result in active resistance to new technology, and hence foreclose positive uses that increase competitiveness and workers’ well-being.

Platform work was only the appetiser. Algorithmic HR management is the icing on the cake

Besides permeating all aspects of our societies, technology is significantly rewiring workplaces and reshaping labour processes. The Covid-19 crisis has further accelerated the digital transformation of managerial functions. Homeworking arrangements ramped up to limit infection risk, scattered teams resorted to collaborative platforms for project administration, interviews for new hires moved online due to travel restrictions, and academic institutions began panic-buying supervision software. In the last decade, platform workers have witnessed large-scale experimentation with practices such as rating, task allocation, incentivisation, customer reviews and gamification, which have now spread beyond the sector’s growing boundaries. Courts, inspectorates and legislatures are effectively closing loopholes in enforcement, after years of perilous propaganda and uncertain litigation. The drawn-out fight over the appropriate legal classification of riders and couriers will probably end soon. The wildest inventions tested in this arena, however, are here to stay.

Advanced technologies are not making humans redundant; they are making workers obedient and managers superfluous. There is an urgent problem to tackle. The existing limits to the expansion of managerial powers were conceived when the potential of new techniques was admittedly unthinkable, at a time when supervision was exercised by humans in a direct, physical fashion. We are now witnessing attempts to track sentiments and predict mood changes. This profound sophistication should encourage us to rediscover the fundamental principles on which labour regulation is premised: human dignity at work, above all. It should also prompt an open discussion on the social desirability of algorithms at work. On closer inspection, perpetuating the techno-determinist narrative risks downplaying the much-needed collective scrutiny of, and bottom-up dialogue on, ground-breaking innovation. As the OECD puts it, “collective bargaining, when it is based on mutual trust between social partners, can provide a means to reach balanced and tailored solutions […] to emerging issues, and complement public policies in skills needs anticipation or support to displaced workers” in a flexible and pragmatic manner.

Data protection laws risk being a blunt weapon

Excitement and anxiety around the patchy adoption of management by algorithm are gaining momentum, and institutions seem aware of the ramifications of the expansion of managerial functions in warehouses, offices and apartments. Not only has the European Commission’s consultation on the legislative initiative on platform work tackled this issue, but the Action Plan on the European Pillar of Social Rights, a flagship political initiative, is also tasked with untangling digitised management in order to reap its benefits while addressing its harmful consequences (the stated aim is to “improve trust in AI-powered systems, promote their uptake and protect fundamental rights”).

However, current privacy standards may fall short of providing meaningful protection if they are narrowly interpreted and applied. Since AI and algorithms are substituting for bosses in a variety of functions, we need to deploy a wide-ranging set of initiatives to regulate, if not ban, automated decision-making.

While it establishes a strongly consistent framework, the General Data Protection Regulation has a limited scope and was designed to encourage the flow of data. Its key mandatory requirement in workplace relationships is to adopt the least rights-intrusive option available. Moreover, Article 8 of the Council of Europe’s Modernised Convention 108 can offer a more human rights-based shield against pervasive control. To ensure the quality of decision-making and improve working conditions, policymakers and social partners must revive the importance of anti-discrimination and occupational safety and health instruments. Above all, there is an urgent need to challenge domination at work.

Regrettably, the ample set of principles governing data collection may be powerless when information is repurposed for less benign ends, or when pre-built or bespoke digital systems are rented from third-party vendors. The introduction of modern technology that assumes executive powers ought to be collectively regulated. Solutions must be systemic, encompassing complementary tools selected according to the final use of algorithms. If algorithms are meant to streamline workloads or reconfigure duties, occupational safety and health regulation comes into play to address physical and psychological risks. When choices are made about the competitive allocation of entitlements such as promotions, for instance through profiling, a modern understanding of anti-discrimination provisions is essential to avert prejudiced outcomes for women, younger and less-educated workers, minorities and vulnerable groups. This will lead to renewed interest in these often-neglected instruments, which hold great potential in the new world of work.
